Blind Facebook Engineer Leads Technology Project that Helps Verbalize What Is in an Image

January 23, 2018

A Facebook team led by a blind engineer may hold the key to one of the most pressing problems on the internet: Screening images and videos for inappropriate content.

"More than 2 billion photos are shared across Facebook every single day," Facebook engineer Matt King said. "That's a situation where a machine-based solution adds a lot more value than a human-based solution ever could."

King's team is building solutions for visually impaired people on the platform, but the technology could eventually be used to identify images and videos that violate Facebook's terms of use, or that advertisers want to avoid.

King's passion stems in part from his own challenges of being a blind engineer.

He was born with a degenerative eye disease called retinitis pigmentosa. As a child King could see fine during the day, but could not see anything at night. Soon that progressed to only being able to read with a bright light, then with a magnification system. He used a closed circuit TV magnification system to finish his degree.

By the time he went to work at IBM as an electrical engineer in 1989, he had lost all his vision. King started volunteering with IBM's accessibility projects, working on a screen reader to help visually impaired people "see" what is on their screens either through audio cues or a braille device. IBM eventually developed the first screen reader for a graphical interface which worked with its operating system OS/2.

One of the lead researchers noticed King was passionate about the project and asked him to switch to the accessibility team full time in 1998. King eventually caught the eye of Facebook, which hired him from IBM in 2015.

"What I was doing was complaining too much," King said. "I just wanted things to be better."

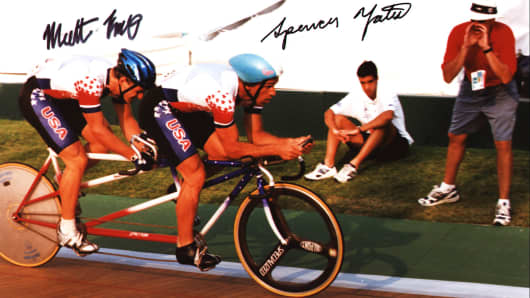

King is used to making the world adapt to him. The avid cyclist competed in the Atlanta, Sydney, and Athens Paralympic games, and plays the piano. At the request of his wife and two children, his family remained in Bend, Oregon after Facebook hired him. To get to Facebook's Menlo Park office, King hitches a ride with a friend with a pilot's license who works at Google.

Matt King at the 1996 Paralympics in Atlanta, Georgia.


Automated alt-text

King's IBM work revolved around creating the Accessible Rich Internet Applications standards, what he called "the plumbing for accessibility on the Web."

Now he works on features to help people with disabilities use Facebook, like adding captions on videos or coming up with ways to navigate the site using only audio cues.

"Anybody who has any kind of disability can benefit from Facebook," King said. "They can develop beneficial connections and understand their disability doesn't have to define them, to limit them."

One of his main projects is "automated alt-text," which describes audibly what is in Facebook images.

When automated alt-text was launched in April 2016, it was only available for five languages on the iOS app, and was only able to describe 100 basic concepts like whether something was indoors or outdoors, what nouns were in the picture, and some basic adjectives like smiling.

Today it is available in over 29 languages on Facebook on the web, iOS and Android. It also has a couple hundred concepts in its repertoire, including over a dozen more complex activities like sitting, standing, walking, playing a musical instrument or dancing.

"The things people post most frequently kind of has a limited vocabulary associated with it," King said. "It makes it possible for us to have one of those situations where if you can tackle 20 percent of the solution, it tackles 80 percent of the problem. It's getting that last 20 percent which is a lot of work, but we're getting there."

Using artificial intelligence to see

Though automatic alt-text is configured for blind and low vision users, solving for image recognition issues with artificial intelligence can benefit everyone.

In December 2017, Facebook pushed an automatic alt-text update that used facial recognition to help visually impaired people find out who is in photos. That technology can also help all users find photos of themselves they were not tagged in, and identify fraudsters who use a person's photo as their profile picture without permission.

Allowing technology to "see" images may also help identify if content is safe for all users or if it's okay to advertise on. Content adjacency — or the images and videos that ads appear next to — has become a big issue for advertisers after reports showed ads running next to inappropriate content on YouTube.

The issue arises because it's not easy for computer programs to understand context, said Integral Ad Science (IAS) chief product officer David Hahn. Software has a hard time telling if an image of a swastika is on a Wikipedia page about the topic, part of a story on Nazism or on a flag being marched around in a protest, he said. It gets even more complicated when advertisers and their needs are involved: they may want to advertise against a movie trailer that contains violence but not next to real-world violence from a protest.

Most image recognition tech relies on terms tagged to the image, called metadata, and on other clues like text or audio on the page, Hahn said. Video is typically analyzed by taking a random sample of still images from the clip and examining them to determine if the video is okay overall.
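The sampling approach Hahn describes can be sketched in a few lines of Python. This is an illustration only, not the method of IAS or any other vendor: the function names, the default sample size of 10, and the rule that every sampled frame must pass are all assumptions, and `is_frame_okay` stands in for whatever image classifier is actually used.

```python
import random
from typing import Callable, List, Optional, Sequence


def sample_frame_indices(total_frames: int, k: int,
                         seed: Optional[int] = None) -> List[int]:
    """Pick up to k distinct frame positions uniformly at random, in order."""
    rng = random.Random(seed)
    k = min(k, total_frames)  # cannot sample more frames than exist
    return sorted(rng.sample(range(total_frames), k))


def video_is_okay(frames: Sequence[object],
                  is_frame_okay: Callable[[object], bool],
                  k: int = 10,
                  seed: Optional[int] = None) -> bool:
    """Judge a whole clip from a random sample of its still frames.

    Assumed rule (hypothetical): the clip passes only if every sampled
    frame passes the per-frame check.
    """
    indices = sample_frame_indices(len(frames), k, seed)
    return all(is_frame_okay(frames[i]) for i in indices)
```

Because only a sample is examined, a brief objectionable segment can fall between the sampled frames and go unnoticed, which is one reason accuracy varies from one system to the next.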

"There are varying degrees of accuracy and sophistication," Hahn said. "It takes a lot of different treatments on images or text. There's not one source or one perspective that should be taken as gospel."

Facebook's automated alt-text still relies on a staff of people telling the technology what certain images are, Facebook's King explained. But the machine's algorithms and recall rate, the frequency with which images are positively identified, are improving. And as it begins to understand more about context, it's getting closer to a day where it will need little to no human help.
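For readers curious how a recall rate is computed, here is a minimal sketch using the standard definition: of the images that human labelers say contain a concept, the fraction the machine also identified. The function name and the sample data are made up for illustration and do not come from Facebook.

```python
from typing import List, Tuple


def recall_rate(labels: List[Tuple[bool, bool]]) -> float:
    """Compute recall from (human_says_present, machine_says_present) pairs."""
    true_positives = sum(1 for human, machine in labels if human and machine)
    false_negatives = sum(1 for human, machine in labels if human and not machine)
    positives = true_positives + false_negatives
    if positives == 0:
        return 0.0  # no images actually contain the concept
    return true_positives / positives


# Hypothetical results for one concept across five images.
pairs = [(True, True), (True, False), (True, True), (False, True), (True, True)]
print(recall_rate(pairs))  # 3 found out of 4 present -> 0.75
```

Images the machine flags by mistake, like the fourth pair above, do not lower recall. They are counted by a separate measure, precision.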

Source: CNBC